what to do when acceptance rate is high metropolis hasting

A Practical Guide to MCMC Part 1: MCMC Basics

Markov Chain Monte-Carlo (MCMC) is an fine art, pure and unproblematic. Throughout my career I have learned several tricks and techniques from various "artists" of MCMC. In this guide I hope to impart some of that knowledge to newcomers to MCMC while at the same time learning/instruction about proper and pythonic code design. I also promise that this will truly exist a practical (i.e. little theoretical statistics knowledge needed) guide to MCMC. Past that, I mean that nosotros will treat MCMC as a tool not an area of statistical theory to study. To that stop we will forego nigh of the theoretical underpinnings of MCMCs and skip straight to implementation and optimization.

Overview

In this postal service you will:

- Get a brief introduction to MCMC techniques

- Understand and visualize the City-Hastings algorithm

- Implement a City-Hastings MCMC sampler from scratch

- Learn about basic MCMC diagnostics

- Run your MCMC and push its limits on various examples

Note: All textile in this post is generated from a Jupyter notebook that can be downloaded here.

Introduction

MCMCs are used to map out and sample probability distribution or probability density functions. The problem of performing probabilistic inference and plumbing fixtures models to information are ubiquitous in many areas of science, statistics, economics, and business organization. In curt, if y'all take a probabilistic model with unknown parameters, y'all will need MCMC (or similar techniques) to obtain probability distributions of those unknown parameters.

At present, there is a huge ecosystem of MCMC variants: Metropolis-Hastings sampling, Gibbs Sampling, ensemble sampling, parallel tempering, adaptive MCMC, Hamiltonian Monte-Carlo, Reversible Spring MCMC, etc, but don't panic yet; we volition start with a bones City-Hastings sampler and gradually build (in other posts) to more than sophisticated techniques. All of the techniques listed above can be quite useful in certain situations (and sometimes extremely catchy to implement: I'g looking at you Reversible Jump!) but I have plant that simple Urban center-Hastings with a bit of adaptation can handle a large array of high dimensional complex problems.

In this first post we volition build a unproblematic Metropolis Hastings sampler. Earlier we go to the specifics of this algorithm we need to lay down a few footing rules for MCMCs:

- MCMCs stochastically explore the parameter space in such a manner that the histogram of their samples produces the target distribution.

- Markovian: Development of the chain (i.e., collections of samples from ane iteration to the other) only depends on the electric current position and some transition probability distribution (i.e., how we move from one point in parameter space to another). This ways that the concatenation has no memory and past samples cannot be used to make up one's mind new positions in parameter infinite.

- The chain will converge to the target distribution if the transition probability is:

- irreducible: From any point in parameter infinite, we must be able to reach any other signal in the infinite in a finite number of steps.

- positive recurrent: For any point in parameter space, the expected number of steps for the chain to return to that betoken is finite. This means that the concatenation must be able to re-visit previously explored areas of parameter space.

- aperiodic: The number of steps to return to a given point must not be a multiple of some value \(yard\). This means that the chain cannot get caught in cycles.

Fright not, this is the almost technical thing that nosotros volition cover and all MCMC methods volition have these rules built in!

Metropolis Hastings MCMC

Suppose we have a target posterior distribution \(\pi(ten)\), where \(x\) here tin can be any drove of parameters (not a unmarried parameter). In gild to move around this parameter infinite nosotros must codify some proposal distribution \(q(x_{i+1}\mid x_i)\), that specifies the probability of moving to a bespeak in parameter space, \(x_{i+i}\) given that we are currently at \(x_i\). The Metropolis Hastings algorithm accepts a "bound" to \(x_{i+ane}\) with the following probability

\[\kappa(x_{i+1}\mid x_i) = \mathrm{min}\left(i, \frac{\pi(x_{i+1})q(x_i\mid x_{i+one})}{\pi(x_{i})q(x_{i+ane}\mid x_{i})}\correct) = \mathrm{min}(1, H),\]where the fraction above is called the Hastings ratio, \(H\). What the above expression means is that the probability of transitioning from point \(x_{i+1}\) given the current position \(x_i\) is a function of the ratio of the value of the posterior at the new point to the sometime point (i.e., \(\pi(x_{i+ane})/\pi(x_i)\)) and the ratio of the transition probabilities at the new point to the one-time indicate (i.e. \(q(x_i\mid x_{i+1})/q(x_{i+ane}\mid x_i)\)). Firstly, it is clear that if this ratio is \(>\) 1 then the bound will be accepted (i.east. the chain advances to \(x_{i+ane}\)). Secondly, the ratio of the target posteriors ensures that the concatenation will gradually move to loftier probability regions. Lastly, the ratio of the transition probabilities ensures that the chain is not influenced by "favored" locations in the proposal distribution function. If this last point is confusing, never worry, nosotros volition requite an example of this later. For now, rejoice in the fact that many proposal distributions are symmetric (i.e., \(q(x_{i+ane}\mid x_i) = q(x_i\mid x_{i+one})\)).

The metropolis hastings algorithm is then:

- Initialize parameters \(x_0\).

- for \(i=1\) to \(i=Due north\):

- Generate proposed parameters: \(x_* \sim q(x_*\mid x_i)\)

- Sample from uniform distribution: \(u\sim U(0, 1)\)

- Compute Hastings ratio: \(H=\frac{\pi(x_{*})q(x_i\mid x_{*})}{\pi(x_{i})q(x_{*}\mid x_{i})}\)

- if \(u < \mathrm{min}(one,H)\) then \(x_{i+1}=x_*\)

- else \(x_{i+1} = x_i\)

For each step in this loop, we draw a proposed parameters \(x_*\). We and so compute the Hastings ratio using this proposed point. By drawing the number \(u\sim U(0,i)\) we allow for a "jump" to \(x_*\) with probability \(\mathrm{min}(1, H)\). So if \(u < \mathrm{min}(ane,H)\) then the jump is accepted then we advance the chain \(x_{i+1}=x_*\), if information technology is not so we stay at the current position \(x_i\). If the proposal distribution \(q\) obeys the three rules higher up (irreducible, positive recurrent, and aperiodic) and then this algorithm is guaranteed to piece of work. Still, choosing smart proposal distributions is what makes MCMC an art!

Before we become into that, lets write some code for a very simple just illustrative example. Past the style, if you are new to this and so write this code yourself, don't just read it. Writing your ain simple MCMC lawmaking is the best way to learn and understand.

Uncomplicated Urban center Hastings sampler

The best way to acquire is to do. So lets make a elementary city hastings sampler. The code below implements the MH-sampler through a function mh_sampler. This office is simple, simply generic plenty to work with different kinds of posterior distributions and different kinds of bound proposals

def mh_sampler ( x0 , lnprob_fn , prop_fn , prop_fn_kwargs = {}, iterations = 100000 ): """Simple urban center hastings sampler. :param x0: Initial array of parameters. :param lnprob_fn: Office to compute log-posterior. :param prop_fn: Role to perform jumps. :param prop_fn_kwargs: Keyword arguments for proposal role :param iterations: Number of iterations to run sampler. Default=100000 :returns: (chain, credence, lnprob) tuple of parameter concatenation , credence charge per unit and log-posterior chain. """ # number of dimensions ndim = len ( x0 ) # initialize chain, acceptance rate and lnprob chain = np . zeros (( iterations , ndim )) lnprob = np . zeros ( iterations ) accept_rate = np . zeros ( iterations ) # commencement samples chain [ 0 ] = x0 lnprob0 = lnprob_fn ( x0 ) lnprob [ 0 ] = lnprob0 # start loop naccept = 0 for two in range ( i , iterations ): # propose x_star , factor = prop_fn ( x0 , ** prop_fn_kwargs ) # draw random uniform number u = np . random . uniform ( 0 , 1 ) # compute hastings ratio lnprob_star = lnprob_fn ( x_star ) H = np . exp ( lnprob_star - lnprob0 ) * cistron # have/refuse step (update acceptance counter) if u < H : x0 = x_star lnprob0 = lnprob_star naccept += 1 # update chain chain [ ii ] = x0 lnprob [ ii ] = lnprob0 accept_rate [ ii ] = naccept / ii return chain , accept_rate , lnprob The function follows the MH-algorithm exactly as written above. It volition render the chain samples (i.e. the columns are the parameters and the rows are the sampler iterations), acceptance rate per iteration and log-posterior values per iteration. By and large, this is all the information yous demand to produce inferences and check convergence and efficiency.

So, now nosotros take a MH-sampler function but we don't however have a proposal distribution. The simplest proposal distribution is just a draw from the prior distribution; notwithstanding, this is hugely inefficient, and since this is a applied guide lets do something useful. Next to a prior describe, a Gaussian proposal with fixed standard divergence is the simplest proposal. In fact, near all other sophisticated proposal distributions are still a Gaussian distribution, albeit without a constant standard difference / covariance. Nosotros will cover these in the next post only for now lets brand a elementary distribution.

def gaussian_proposal ( x , sigma = 0.one ): """ Gaussian proposal distribution. Draw new parameters from Gaussian distribution with mean at current position and standard deviation sigma. Since the hateful is the current position and the standard difference is fixed. This proposal is symmetric then the ratio of proposal densities is one. :param x: Parameter array :param sigma: Standard difference of Gaussian distribution. Can exist scalar or vector of length(x) :returns: (new parameters, ratio of proposal densities) """ # Draw x_star x_star = 10 + np . random . randn ( len ( x )) * sigma # proposal ratio factor is ane since leap is symmetric qxx = 1 render ( x_star , qxx ) This proposal is nearly as simple as it gets, mathematically it is:

\[q(x_*\mid x_i) = \textrm{Normal}(x_i, \sigma^2),\]that is, a Gaussian centered on the current position \(x_i\) with variance given by a standard divergence parameter \(\sigma\).

Ok at present we have our sampler and our proposal distribution, lets exam this out on a very uncomplicated ane-D Gaussian likelihood role with an unknown mean and unit of measurement variance. We volition employ a uniform prior on the mean \(\mu \sim U(-ten, 10)\). Of form, we don't actually need MCMC to sampler this distribution merely it works as a skillful example that is easy to visualize.

def simple_gaussian_lnpost ( 10 ): """ i-D Gaussian distribution with mean 0 std 1. Prior on mean is U(-10, x) :param x: Array of parameters :returns: log-posterior """ mu = 0 std = 1 if 10 < 10 and x > - 10 : return - 0.5 * ( ten - mu ) ** 2 / std ** ii else : return - 1e6 Visualizing the MH-step

Before nosotros run a long concatenation, lets try to visualize what it going on at each iteration.

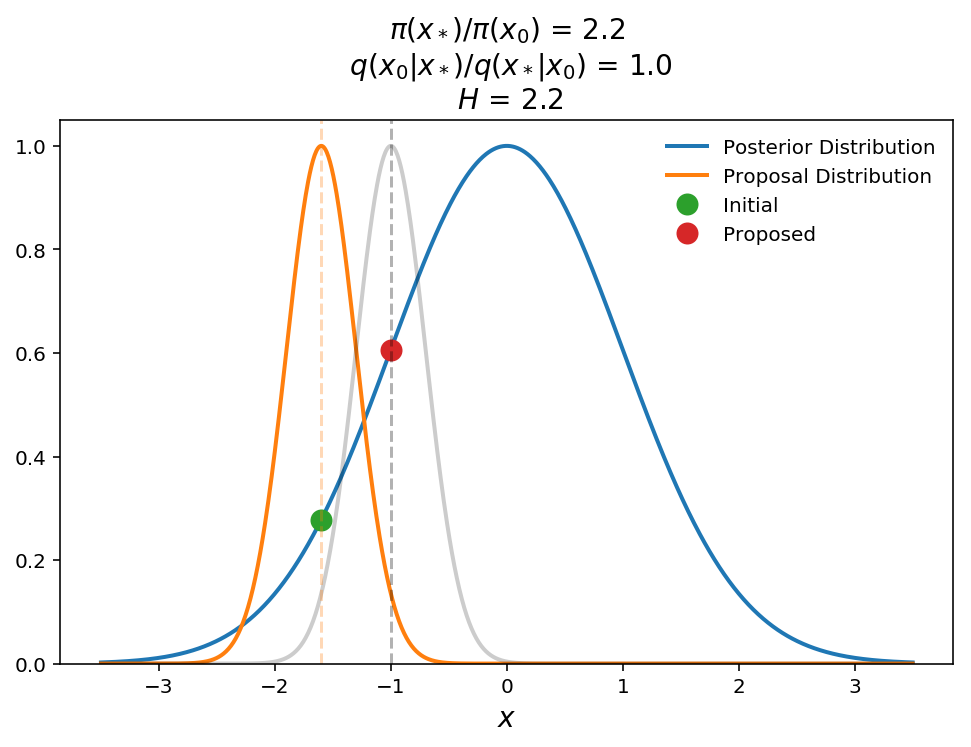

The plot above shows a schematic of a successful jump. The bluish shows the 1-D Gaussian posterior distribution, the orange is the Gaussian proposal distribution, \(q(x_*\mid x_0)\), (\(\sigma=0.5\)), and the green and red points are the initial and proposed parameters, respectively. For illustration purposes, the gray curve shows \(q(x_0\mid x_*)\) to testify the symmetry of the proposal distribution. In this case we encounter that the proposed point returns a Hastings ratio of ii.2 (i.e. transition probability of 1) and therefore the jump will be accustomed.

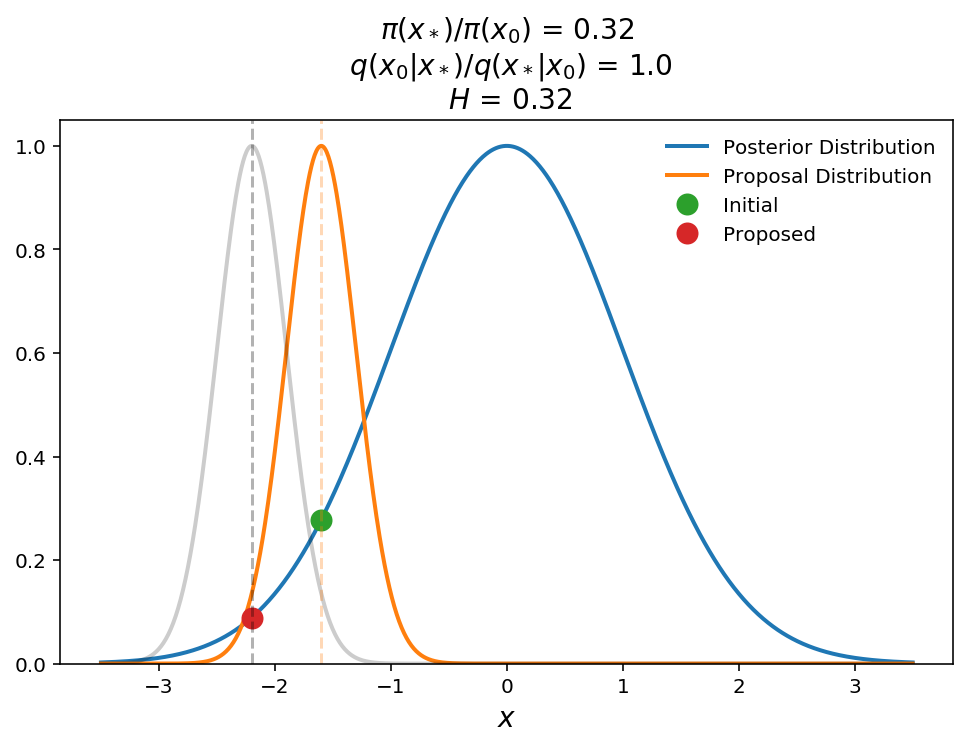

In this plot, we show what happens when a leap is proposed to a lower probability region. The transition probability is equal to the Hastings ratio here (retrieve transition probability is \(\mathrm{min}(1, H)\)) which is 0.32, which means that we volition motility to this new signal with 32% probability. This ability to movement back downward the posterior distribution is what allows MCMC to sample the full probability distribution instead of simply finding the global maximum.

As I promised above, lets give a quick demonstration of what happens with a non-symmetric proposal distribution. Lets suppose we have the same posterior but now we use a proposal from a fixed Gaussian distribution (i.eastward. the hateful does non modify with electric current position).

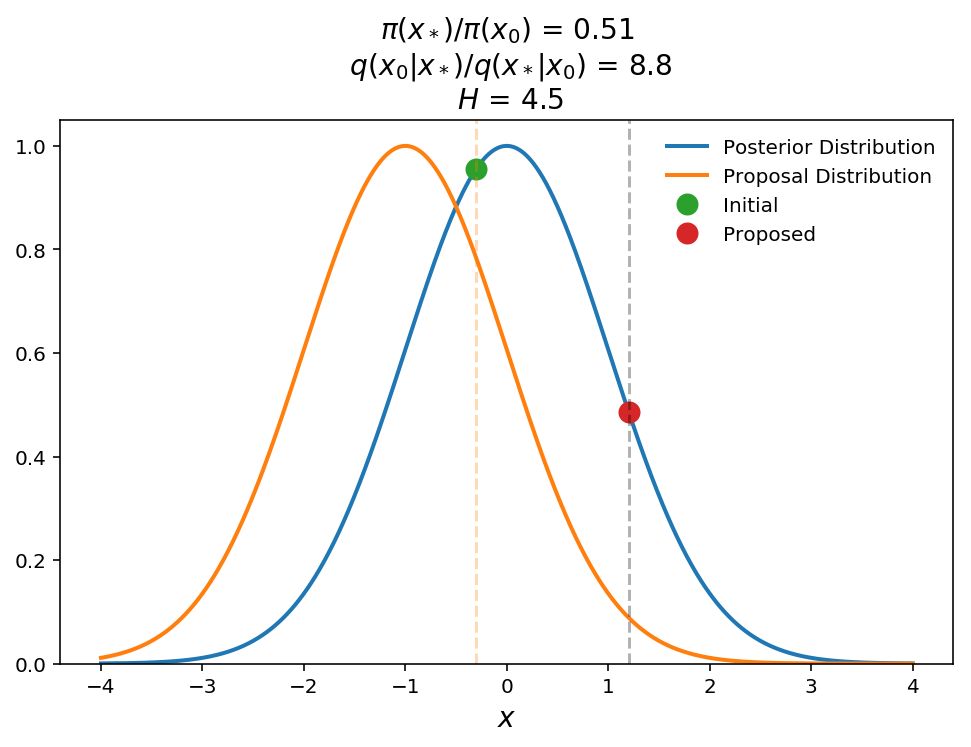

In the above plot nosotros show that the proposal distribution is a fixed Gaussian \(q(x_*\mid x_0)\sim \textrm{Normal(-ane, ane)}\). Here we show that even though the proposed signal is at a lower posterior probability than the initial indicate, the Hastings ratio is withal \(> 1\) (will accept jump with 100% probability). Qualitatively this makes sense considering we need to weight that proposed point college to have into business relationship for the fact that the proposed point is "difficult" to get to even though information technology still has a relatively high posterior probability value.

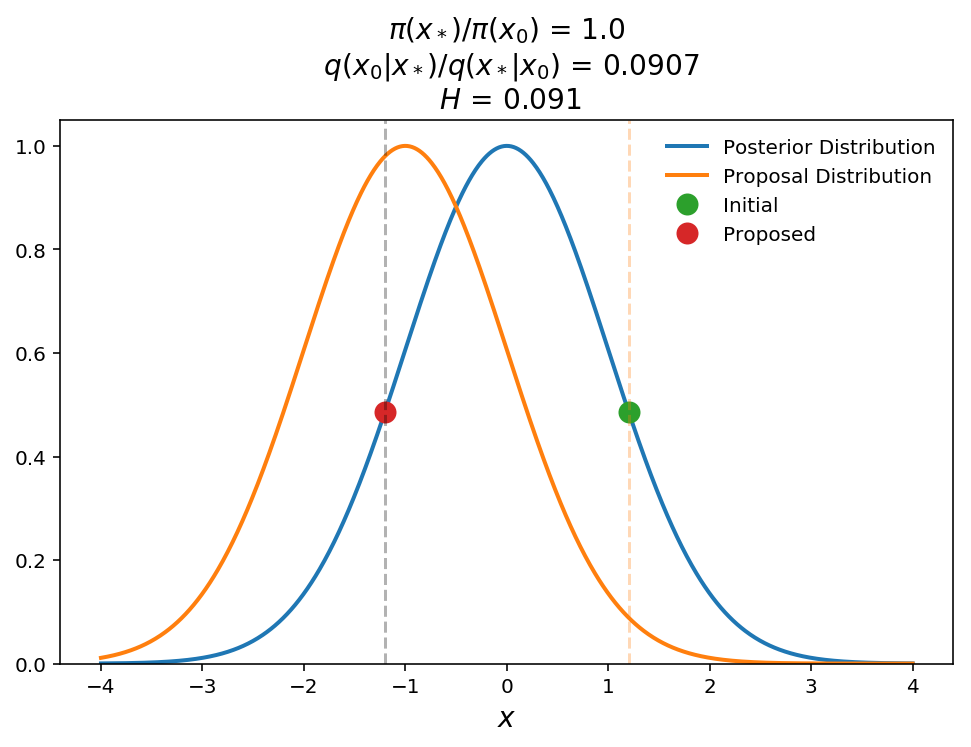

In this final case, we bear witness the contrary effect. In this case, even though the posterior probabilities are the same for the current and proposed points, the Hastings ratio is only 0.09 (i.east. 9% run a risk of accepting jump). Again, with some idea this makes sense. The proposed betoken must be weighted down because information technology is nearly the pinnacle of the proposal distribution (i.e. lots of points will be proposed around this position) and therefore is "piece of cake" to get to fifty-fifty though the posterior probability is no different than at the initial indicate.

In many cases we practise not need to worry nearly the proposal densities as many proposal distributions are symmetric but you always need to continue it in mind when amalgam your ain jump proposals as it can lead to biased results if not correctly taken into account. I will show how to easily check for the correct ratios later in this series.

Diagnosing efficiency and convergence

Now that we empathise the Metropolis Hastings pace. Lets actually run our sampler on the elementary 1-D gaussian likelihood. Since this is just a i-D trouble information technology is quite easy to determine the optimal jump size just lets explore unlike values for illustration purposes.

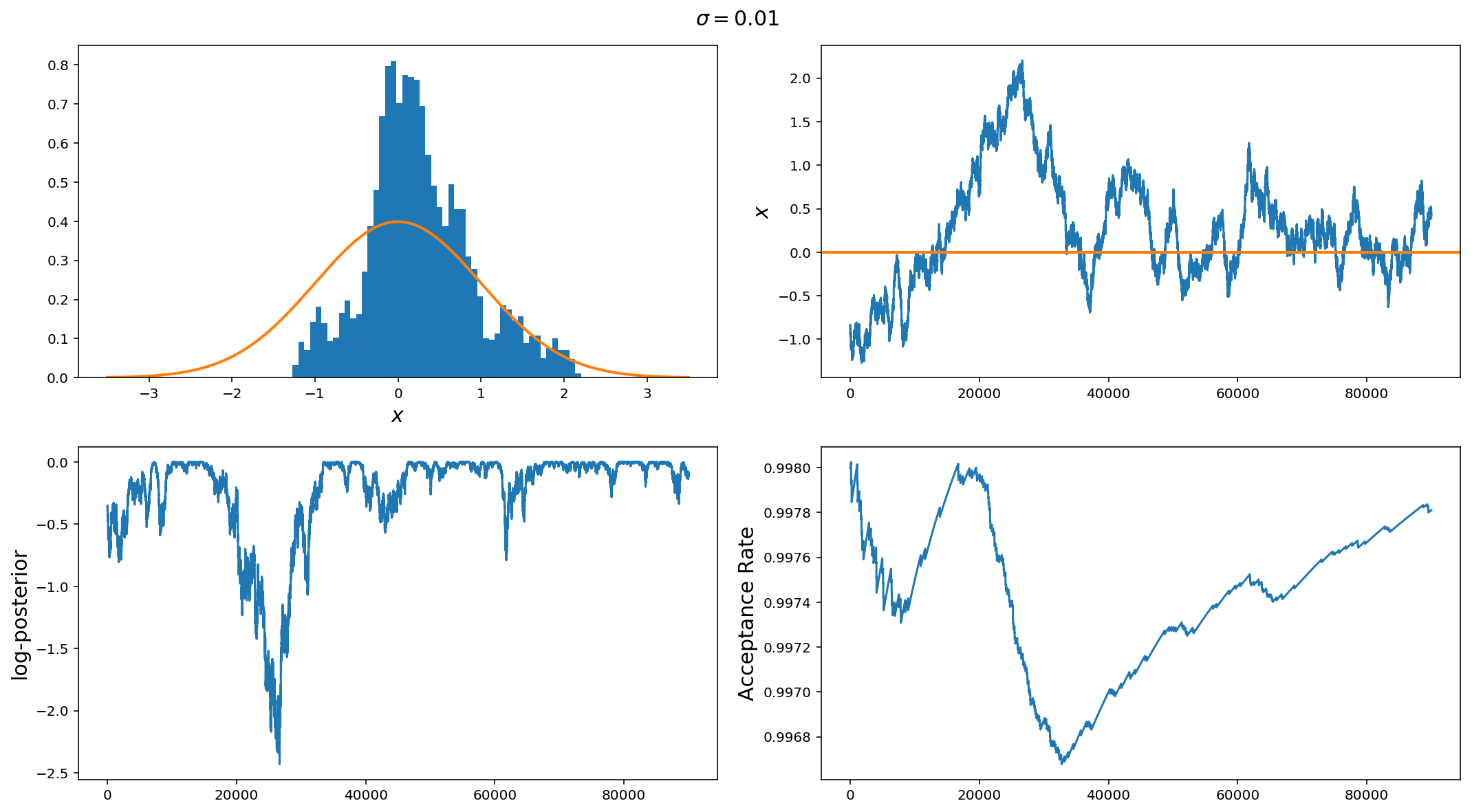

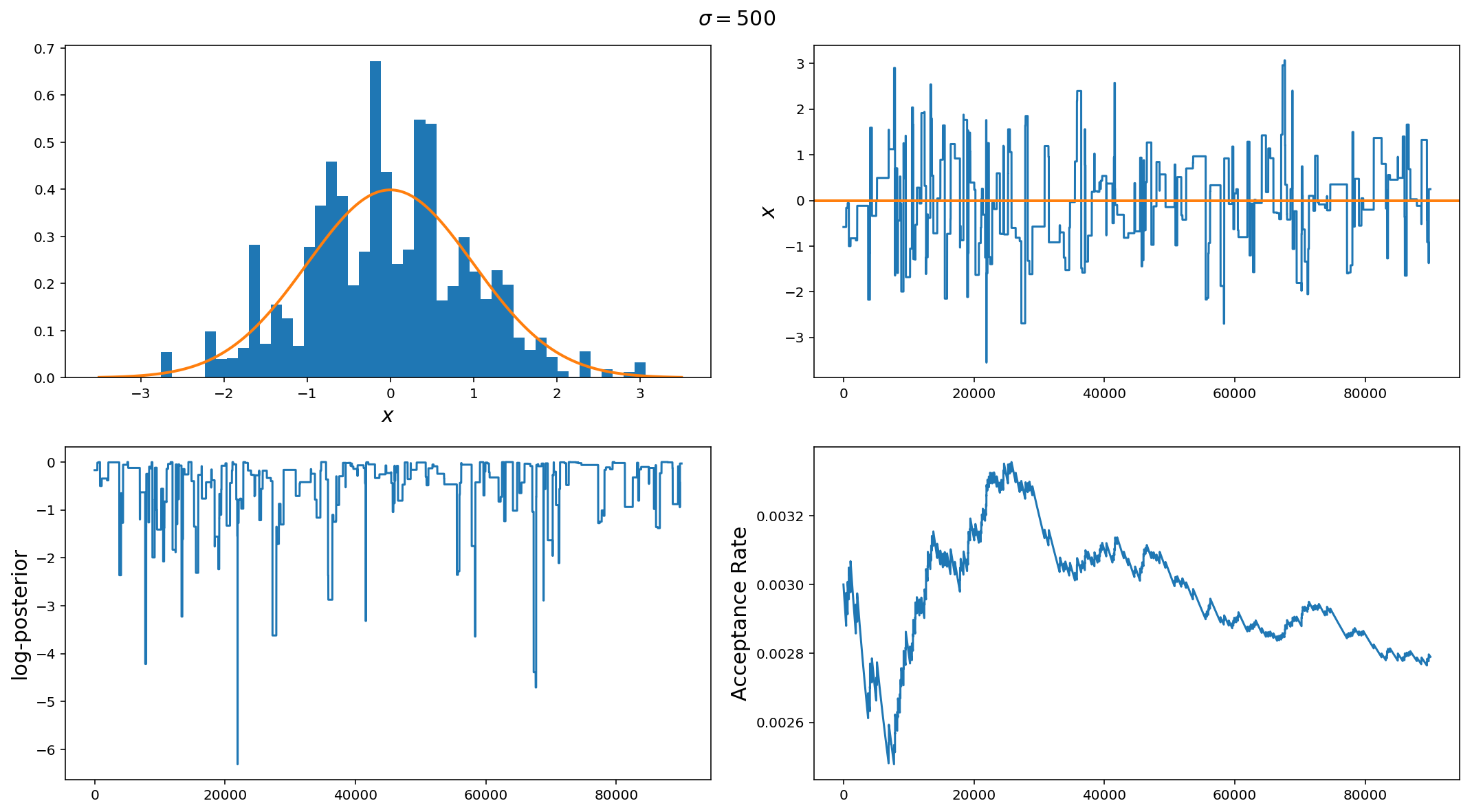

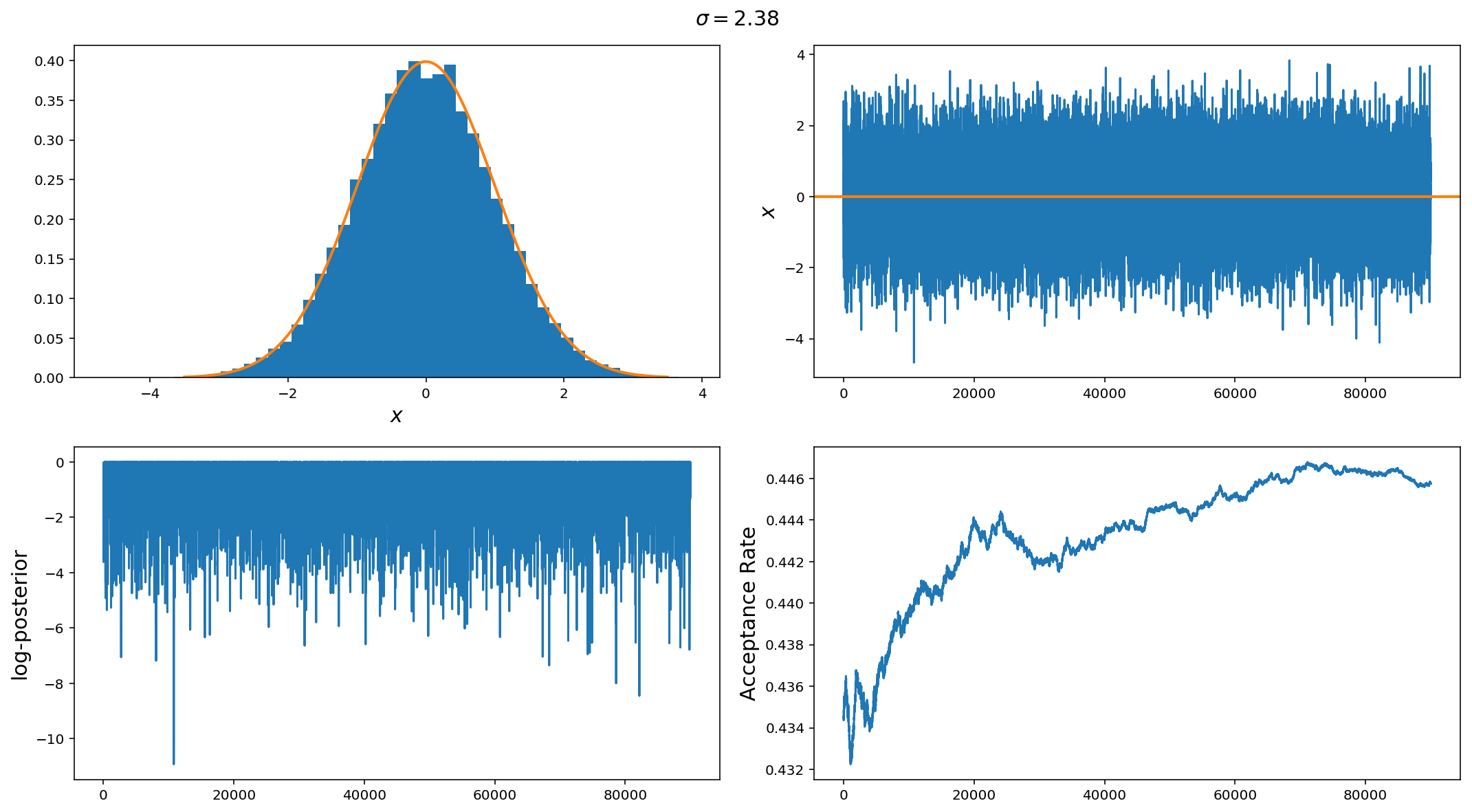

The panels are (from summit left to bottom right): Histogram of samples, sample vs iteration (i.e. trace plot), log-posterior vs iteration, and proposal acceptance charge per unit vs iteration.

In an optimally performing MCMC, the histogram of samples should converge to the posterior distribution, the trace of the chain should sample around the maximum of the posterior such that the samples are shut to i.i.d (independent, identical distribution). The log-posterior concatenation should be smoothly varying around the maximum. Lastly, the acceptance rate depends on the problem simply typically for ane-d problems, the acceptance rate should be around 44% (effectually 23% for more than five parameters). There are more than sophisticated means of diagnosing convergence (it gets harder with many parameters) which we will become into in further posts, but plots like this get you a long manner to diagnosing problems with your sampler.

In this example we have used a spring standard deviation of 0.01 (remember the standard deviation of the posterior) is 1.0. And then, it is clear that the histogram of samples is very poorly reproducing the posterior, the chain trace has big timescale (iteration scale) correlations (i.e. it very slowly varies around the mean). We can as well run into that around the middle of the run, the chain drifted off from the high probability region (lesser left plot) and it took quite a large number of samples to get back because of the small jump size. Lastly, we can run into that the acceptance rate is 99%.

Overall, if you lot meet something like this, the first step is to increment the jump proposal size.

Ok, and so \(\sigma=0.01\) was too pocket-sized, what most \(\sigma=500\)? Hither nosotros see the contrary of the first instance. The leap size hither is way too big. While we did do a better job at recovering the posterior, we can encounter from the choppiness of the trace plots and low credence charge per unit that this run is very inefficient every bit it spends a lot of time at fixed locations in parameter infinite and rarely moves.

If y'all see something like this, the outset thing to do is to decrease the jump proposal size.

Finally, we have a good jump proposal size. Actually this is the optimal footstep size for a gaussian distribution \(\sigma_{\rm leap} = ii.38 \sigma_{\rm posterior} / n_{\rm dim}\). In this case we tin can see that the samples are near perfect draws from the posterior distribution, the chain trace is nearly i.i.d and the acceptance rate is 44%

The above illustrates the point merely it is and then easy that it may be hard to see why MCMCs are and then of import simply difficult to implement efficiently. Earlier we wrap up for this week, lets look at two more, slightly hard examples.

A (slightly) more complicated trouble

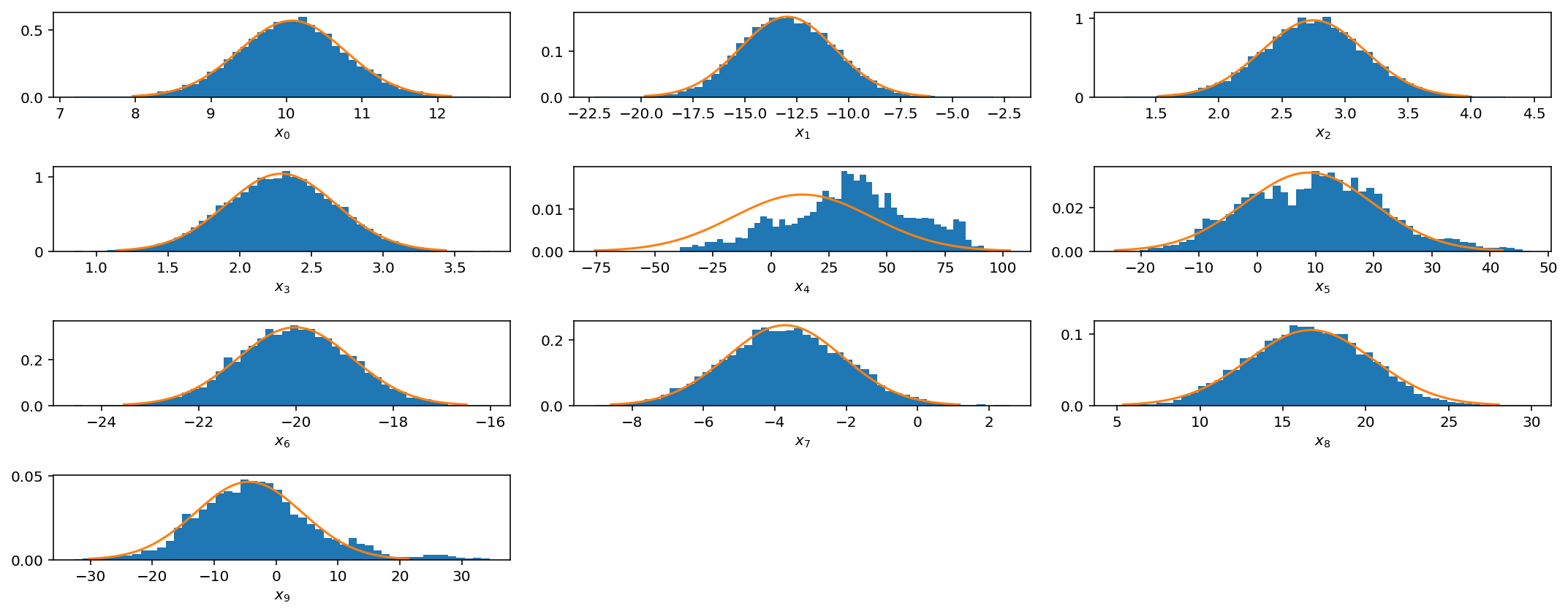

We have tacked a 1-D Gaussian posterior. Lets at present look at a 10-D problem. This posterior distribution has the following properties

\[\sigma^ii \sim \textrm{LogNormal}(0,one.5)\\ \mu \sim \textrm{Normal}(0, 10)\]That is information technology is a gaussian with variance fatigued from a Log-Normal distribution and the means are drawn from a normal distribution with 0 hateful and variance 10. This way, we can accept multiple scales present in the posterior.

class multi_gaussian_lnpost : """ N-Dimensional Gaussian distribution with mu ~ Normal(0, x) var ~ LogNormal(0, i.five) Prior on hateful is U(-500, 500) :param ndim: Numver of dimensions to gaussian (default=ten) :param seed: Random number seed for reproducible results. """ def __init__ ( self , ndim = x , seed = 12345 ): np . random . seed ( seed ) self . var = x ** ( np . random . randn ( ndim ) * ane.five ) self . mu = scipy . stats . norm ( loc = 0 , scale = 10 ). rvs ( ndim ) def __call__ ( self , x ): """ Call multivariate normal posterior. :param x: Assortment of parameters :returns: log-posterior """ if np . all ( x < 500 ) and np . all ( 10 > - 500 ): return scipy . stats . multivariate_normal ( hateful = cocky . mu , cov = self . var ). logpdf ( x ) else : return - 1e6

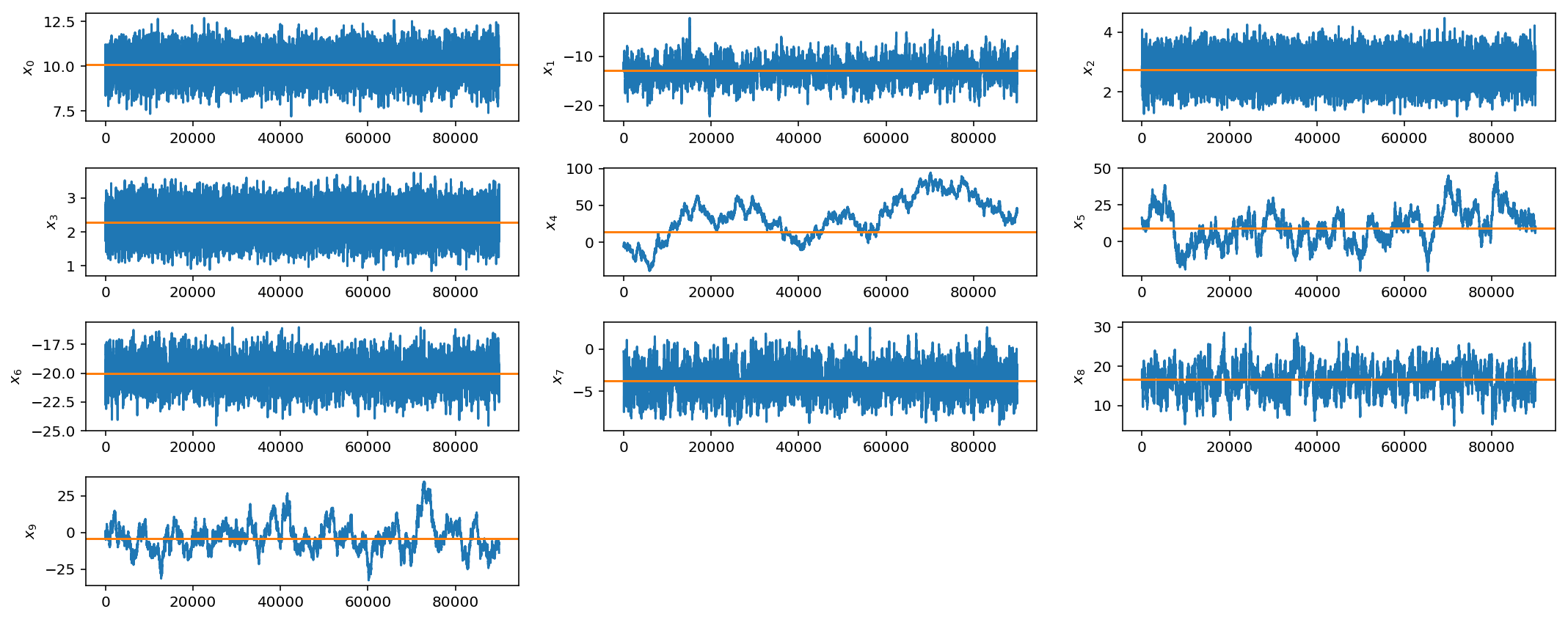

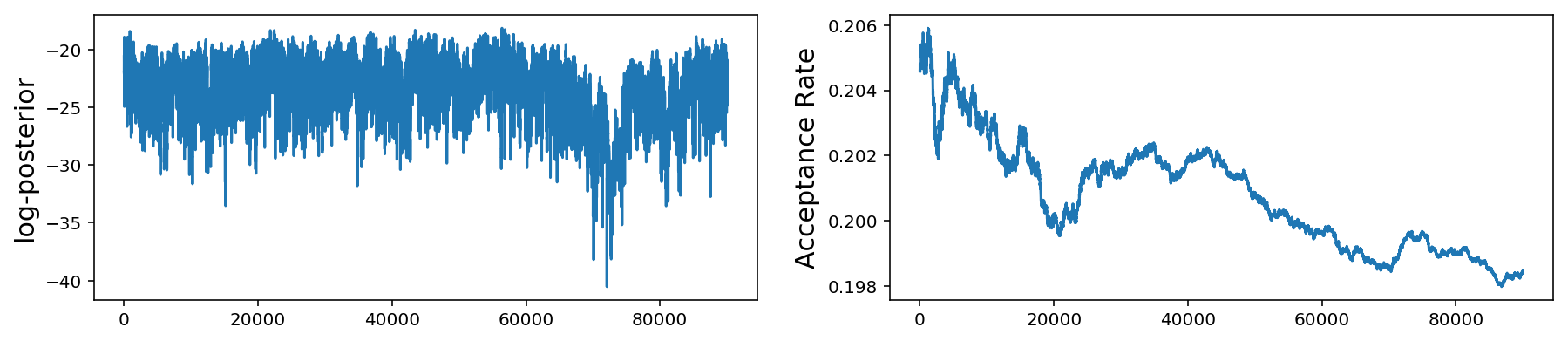

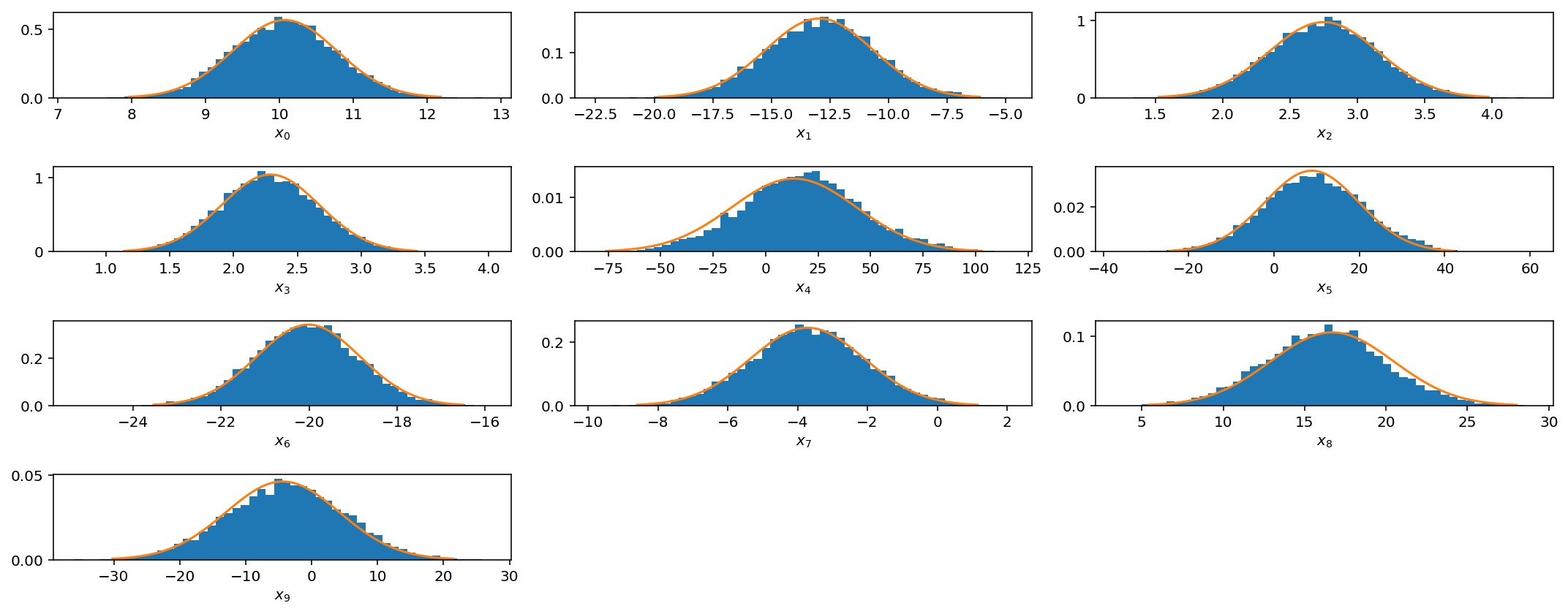

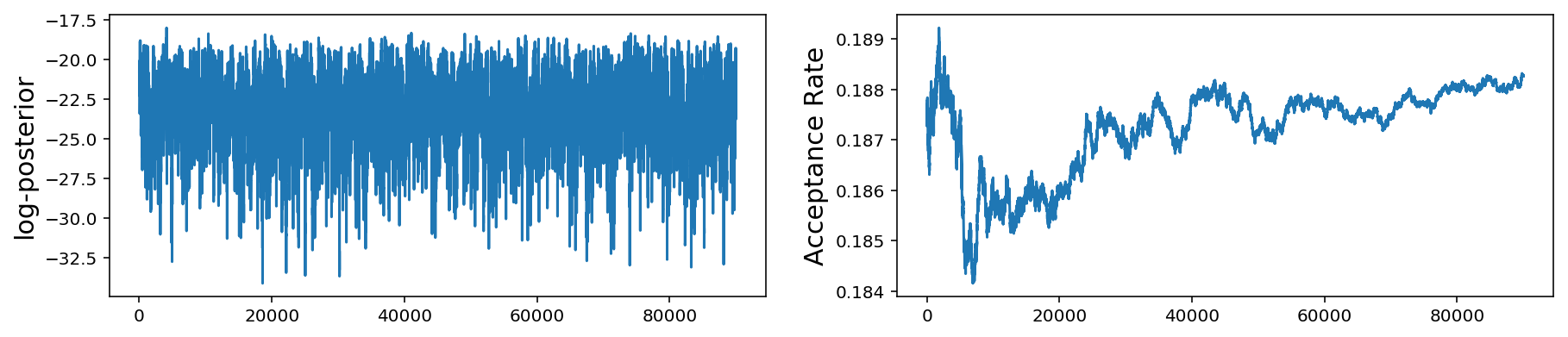

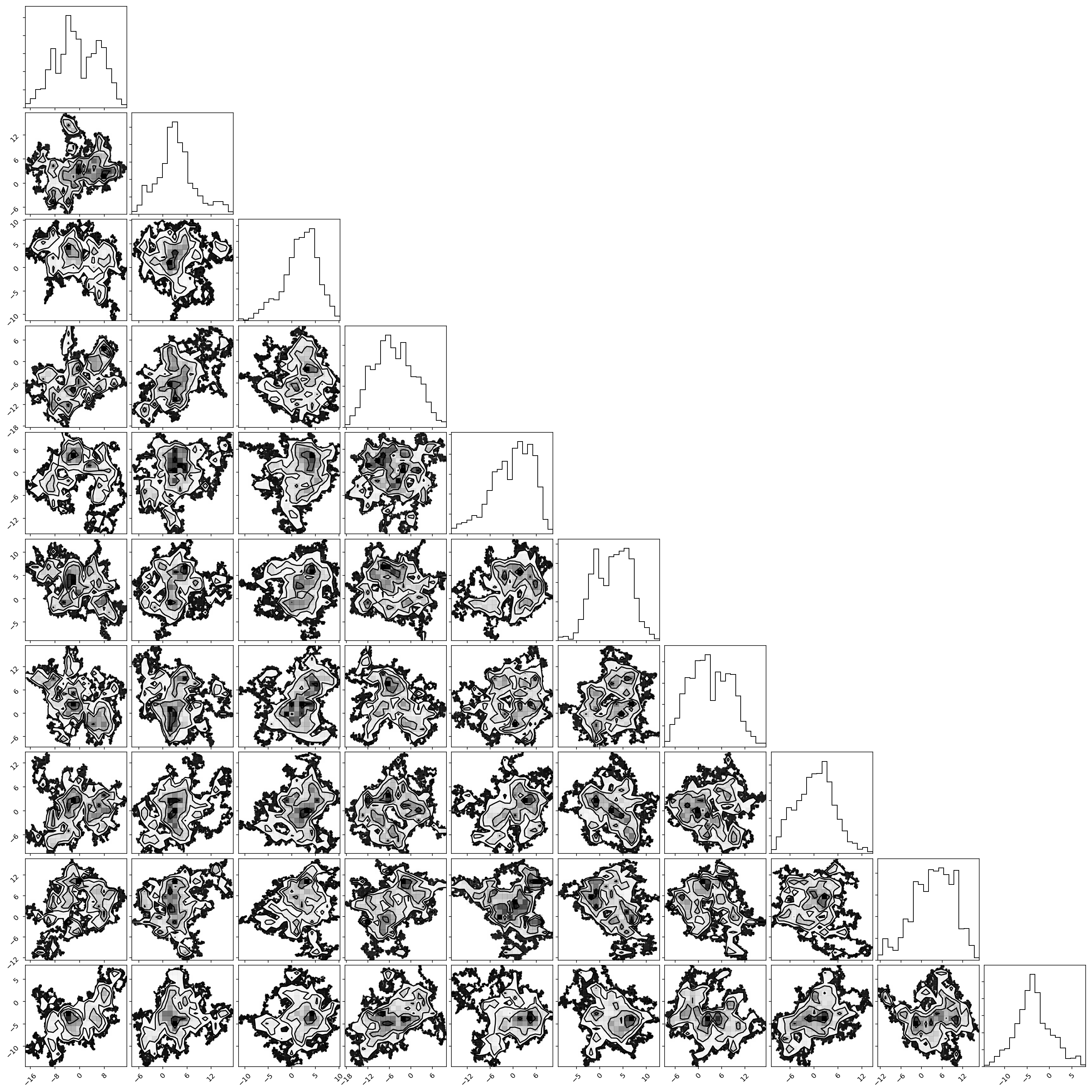

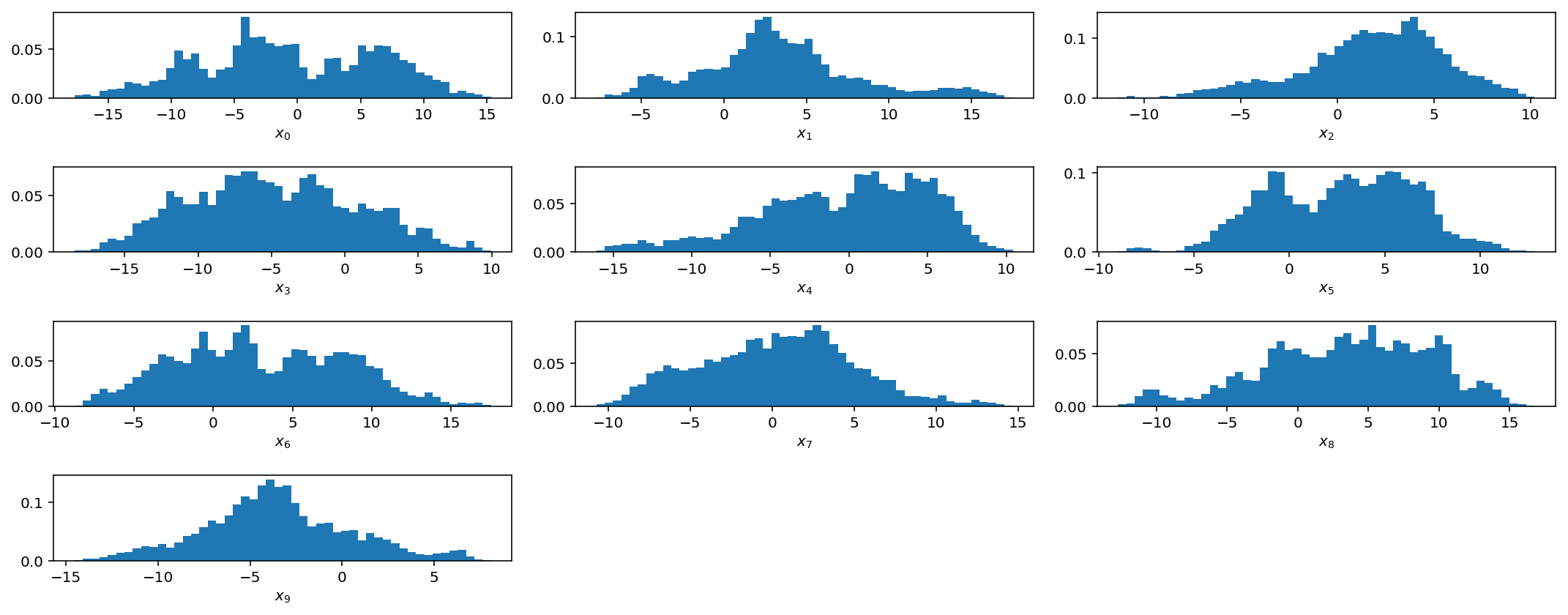

These plots are the same as those for the ane-D trouble just now nosotros show the histogram and trace for all 10 mean parameters. As earlier, the lesser plot shows the log-posterior vs iteration and the acceptance rate vs iteration.

We come across that even if we have multiple scales present, we can yet do adequately well with our very simple jump proposal with a single step size. However equally we get to larger parameter spaces this begins to pose a trouble.

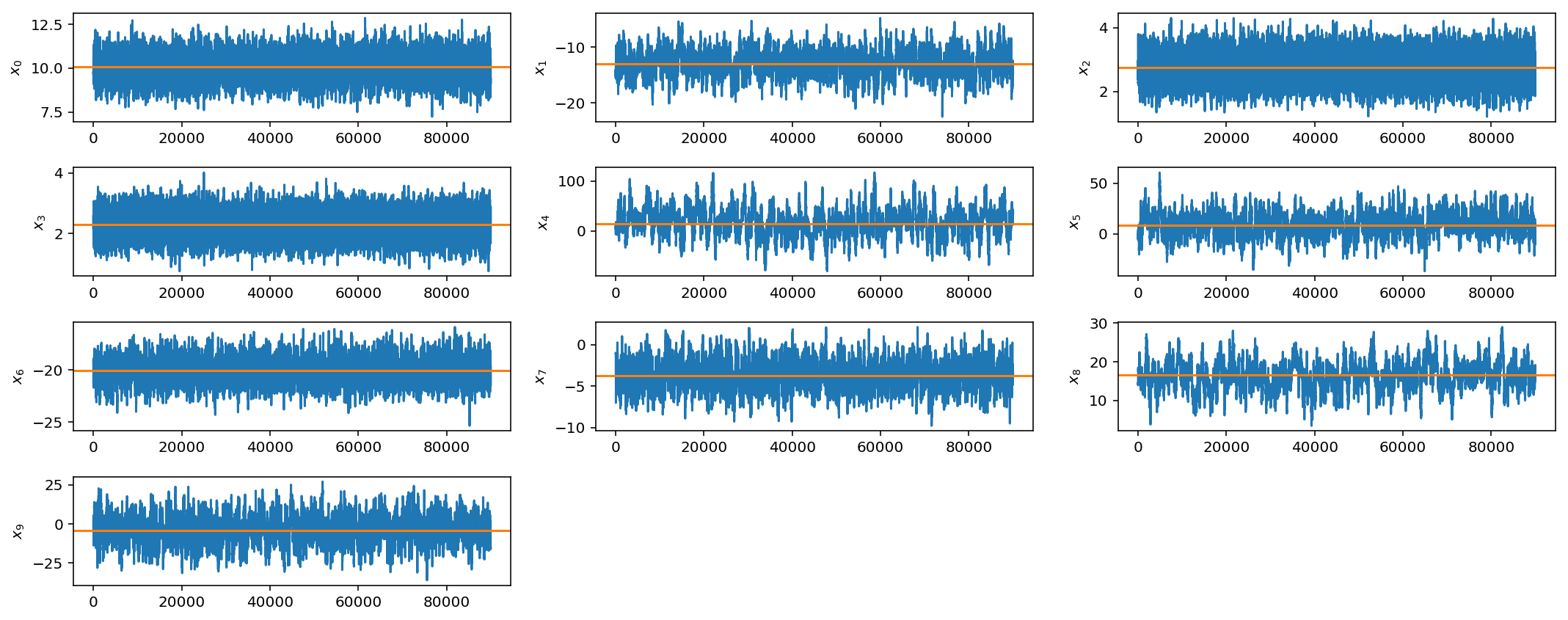

However, if you encounter these kinds of results, it would be all-time to endeavour different spring sizes for different parameters. For example, I will increase the spring size for parameters \(x_4\), \(x_5\), and \(x_9\).

OK, not perfect, only better. As you tin imagine, this can get quite tedious when you have larger parameter spaces and more than complicated posterior distributions. In subsequently posts we volition discuss how to automate this tuning process via adaptive jump proposals.

To wrap out this week though lets move to a slightly more realistic example: not-petty covariance matrix and larger parameter infinite.

Covariant parameters in high dimensions

This scenario is more realistic. In many cases, parameters have some covariance with one another and fancy techniques and tricks are really needed in high dimensions.

Here we will sample from a 50-dimensional gaussian distribution with non-trivial covariance matrix.

class corr_gaussian_lnpost : """ N-D Gaussian distribution with ways drawn from mu ~ Normal(0, one) and non-trivial dense covariance matrix. Prior on mean is U(-50, 50) :param ndim: Dimension of multivariate gaussian (default=10) :param seed: Random number seed for reproducible distribution """ def __init__ ( self , ndim = 50 , seed = 12343 ): np . random . seed ( seed ) cocky . mu = np . random . rand ( ndim ) Thousand = np . random . randn ( ndim , ndim ) self . cov = np . dot ( Chiliad , One thousand . T ) def __call__ ( self , x ): """Render multivariate-normal log posterior :param x: Array of parameters :returns: log-posterior """ if np . all ( 10 < 50 ) and np . all ( 10 > - 50 ): return scipy . stats . multivariate_normal ( hateful = cocky . mu , cov = cocky . cov ). logpdf ( x ) else : return - 1e6

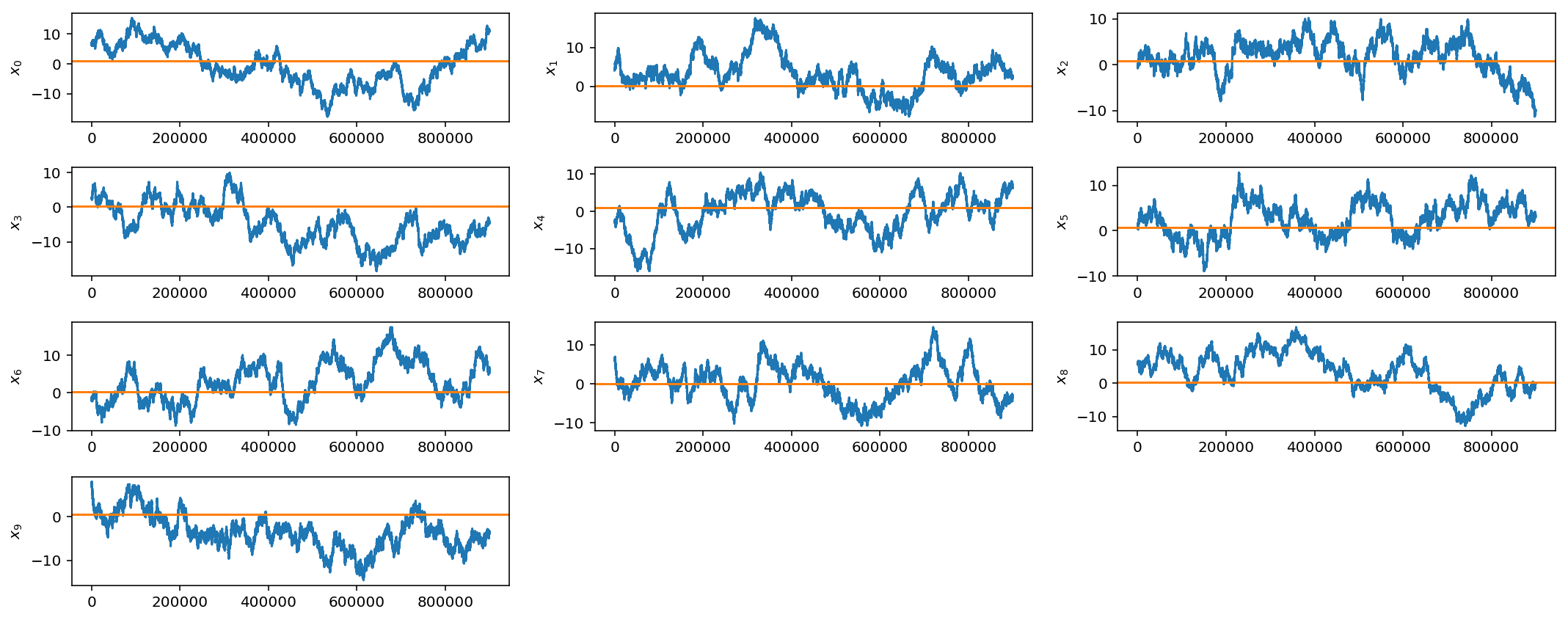

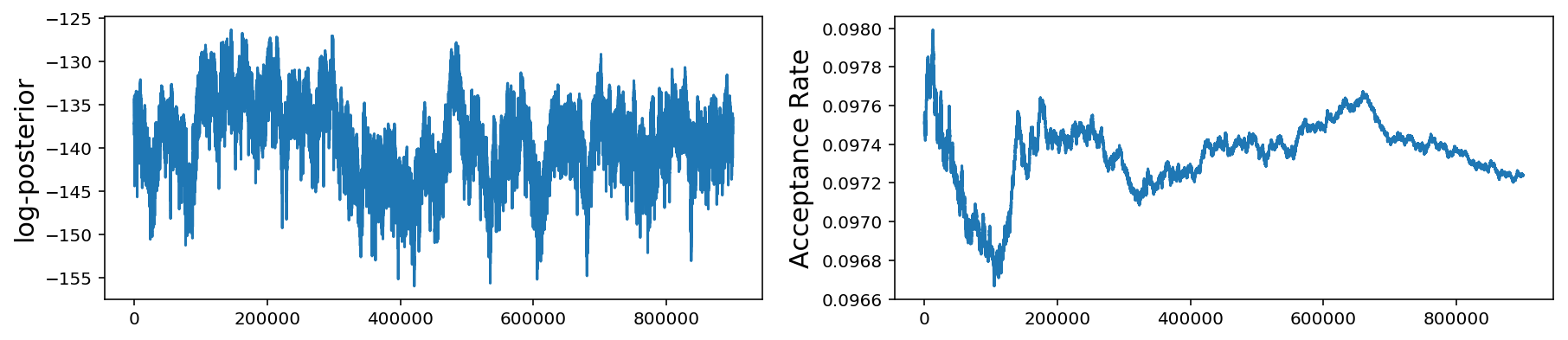

Above, we but plot the offset 10 parameters but information technology is obvious that, even after one meg samples, our gaussian jump proposal is not working very well at all as the chains have non converged.

We volition pick up with this problem next week when we introduce adaptive bound proposals and spring schedules.

Update Jan sixteen, 2021: Unfortunately, a fully-fledged Part 2 to this post never came about but you can check out some further resource hither.

Source: https://jellis18.github.io/post/2018-01-02-mcmc-part1/

0 Response to "what to do when acceptance rate is high metropolis hasting"

Post a Comment